Diving Into Kubernetes: Preparing for the CKA

Preparing for The CKA & Diving into the Kubes

I recently passed the Linux Foundation’s Certified Kubernetes Administrator (CKA) certification and thought I’d throw some notes together on how I prepared. The CKA is a hands-on practical test. There are no multiple-choice questions, just raw application of Kubernetes knowledge.

Overall I enjoyed the CKA, and found that the exam questions reflect real-world examples of the day-to-day tasks I run into with Kubernetes.

This blog post gives an overview of the prep I did before taking the CKA, along with what I learned about the Kubernetes ecosystem along the way. I wanted to do more than just “pass the exam”, so this will detail my experimentation with various tools in the Kubernetes ecosystem.

Previous Experience with Kubernetes

In 2020 I experimented with porting Hack Fortress from a Docker-focused workload deployment to Kubernetes. Given our needs for the CTF, it seemed like overkill at the time, but this was my first deep dive into the Kubernetes world. While I’m fairly new to the Kubernetes ecosystem, I have been involved with automating and deploying container workloads since ~2014. As I slowly get deeper into Kubernetes at work, I thought obtaining the CKA made sense, and that was the initial motivation.

Playing with Kubernetes, Where Do I Start?

When looking at how to stand up a local dev environment for Kubernetes, it’s fairly easy to become overwhelmed by the number of different “developer-focused” Kubernetes distributions. For example, off the top of my head, there’s

- Kind

- Microk8s

- Minikube

- Docker Desktop k8s

- others?

And then, as you branch into “production ready” Kubernetes distributions (some offer commercial support), you’ll quickly find:

- RKE

- k3s

- k0s

- Kubernetes via kubeadm

- OpenShift/OKD

- Various Cloud provider Solutions (48 at the time of this writing)

- 68 other Kubernetes certified distributions

Across all of these distributions, the Kubernetes APIs are the same (or they should be to my knowledge). Vendor-specific distributions have their own twist on things that you should be aware of for production workloads. For example, the default network overlay for k3s, flannel, does not support network policies. However, k3s ships with kube-router for policy enforcement. This may or may not be a deal-breaker, depending on what you’re looking to accomplish.
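One quick way to see whether a given cluster actually enforces network policies is to apply a default-deny ingress policy and check whether pod-to-pod traffic stops. Below is a minimal sketch, assuming a working kubectl context. The function name `apply_default_deny` and the policy name `default-deny-ingress` are made up for illustration and are not part of any tool.

```shell
# Sketch: apply a default-deny ingress NetworkPolicy to one namespace.
# Assumption: kubectl already points at the cluster under test.
# apply_default_deny and default-deny-ingress are hypothetical names.
apply_default_deny() {
  # First argument is the namespace; falls back to "default".
  kubectl apply --namespace "${1:-default}" -f - <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}      # empty selector = every pod in the namespace
  policyTypes:
    - Ingress          # no ingress rules listed, so all ingress is denied
EOF
}
```

Running `apply_default_deny my-namespace` and then curling one pod from another is a reasonable smoke test. On a CNI without policy support the API server still accepts the object; it simply has no effect, which is exactly the kind of discrepancy worth knowing about.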

As you branch into cloud environments, the shared-responsibility model is…interesting to say the least. Understanding where the cloud provider’s responsibilities end and the end user’s begin is super critical when looking at long-term infrastructure investment.

I’ve experimented with a couple of these distributions (detailed below) largely out of curiosity. It’s interesting to me to see which features are supported across all distributions, and where discrepancies come into play (largely k/v backends, CNI/CSI). As a former Linux “distro hopper”, experimenting with various Kubernetes distributions was just a natural extension of that behavior.

Setting Up A Local Dev Environment - Minikube & Microk8s

For simple development environments, my personal favorite was Minikube. Minikube has libvirt integration to spin up worker nodes as actual stand-alone VMs. While you could opt for something simpler and just run “worker nodes” as Docker containers, having dedicated VMs allows you to experiment with node failure situations and see how Kubernetes deployments rebalance once a worker node goes offline. For the basics of deploying pods and understanding Kubernetes primitives, you will go far with Minikube. If you’re looking to match your dev environment more closely to your production environment, both the CRI and the CNI (--cni) can be configured as well. Looking for more advanced features? Minikube supports additional addons, which can be listed via minikube addons list. These addons include gvisor, metallb and istio.
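As a rough sketch of the setup described above, here is how a three-node, VM-backed Minikube cluster could be started. It assumes the kvm2 (libvirt) driver is installed. The profile name `cka-lab`, the function names, and the choice of containerd and Calico are my own placeholders, so swap in whatever matches your production environment.

```shell
# Sketch: a three-node Minikube lab where every node is its own VM.
# Assumptions: the kvm2 (libvirt) driver is installed; "cka-lab" is a
# hypothetical profile name; containerd/calico are example choices.
start_lab_cluster() {
  minikube start \
    --profile cka-lab \
    --driver=kvm2 \
    --nodes=3 \
    --container-runtime=containerd \
    --cni=calico
}

# List the optional addons (gvisor, metallb, istio, ...) for that profile.
list_lab_addons() {
  minikube addons list --profile cka-lab
}
```

Calling `start_lab_cluster` followed by `kubectl get nodes` should show one control-plane node and two workers once the VMs have booted.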

If you’re an Ubuntu user, take a look at Microk8s. Maintained by Canonical and installed as a snap package, this was by far one of the easiest installation methods I’ve had with Kubernetes. Notably, the plugins that Microk8s supports make it very compelling as well. During early experimentation my desktop had an Nvidia GPU, and enabling the GPU package seamlessly made it possible to deploy GPU-focused workloads. It was as easy as executing microk8s enable gpu. That being said, I didn’t have any GPU-focused workloads to deploy. If I did, microk8s would be my go-to.

Learning the Concepts

The Kubernetes documentation is the single source of truth for the Kubernetes project. Going through the documentation and associated tutorials is a great way to understand fundamental concepts and deployments. However, just reading the documentation can be quite boring. Leveraging your dev environment with self-assigned “mini-tasks” is a great way to stay engaged and ensure you have a rapid feedback loop for learning.

For example, after reading about scheduling, go forth and schedule a deployment across your multi-node test environment (as discussed with Minikube). Then, go forth and destroy nodes. Watch the generated Kubernetes events via kubectl get events. You don’t need complex apps deployed to experiment with, and if you’re looking for something out of the box, the Google Kubernetes Engine tutorials are available at this GitHub repo.
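The “deploy, break, watch” mini-task above could look something like the following. This is only a sketch: it assumes a multi-node Minikube cluster using the default profile (where the third node is named `minikube-m03`). The deployment name `web`, the nginx image, and the function names are placeholders I picked for illustration.

```shell
# Sketch of the "break a node and watch what happens" mini-task.
# Assumptions: a multi-node Minikube cluster on the default profile;
# "web" and the nginx image are placeholder choices.
deploy_demo() {
  kubectl create deployment web --image=nginx --replicas=6
  kubectl get pods -l app=web -o wide   # NODE column shows placement
}

# Stop one worker VM; defaults to Minikube's third node.
break_node() {
  minikube node stop "${1:-minikube-m03}"
}

# Show events oldest-first, then where the pods live now.
watch_recovery() {
  kubectl get events --sort-by=.metadata.creationTimestamp
  kubectl get pods -l app=web -o wide
}
```

Run `deploy_demo`, then `break_node`, then `watch_recovery` a few times over the next several minutes. Pods on the stopped node are not replaced instantly: with default settings they are only evicted after the not-ready toleration expires (about five minutes), which is a useful thing to see first-hand.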

As recommended by several individuals on Reddit’s /r/kubernetes subreddit, I purchased Mumshad Mannambeth’s Certified Kubernetes Administrator course on Udemy to have a more structured learning path. I can’t think of a single resource to recommend more. The lessons are broken down into bite-sized chunks, and the hands-on labs allowed for a very structured approach in preparing for the CKA. A lot of my ideas for “mini-tasks” spawned from watching Mumshad’s course content as well.

When registering for the Linux Foundation’s Certified Kubernetes Administrator exam you receive two killer.sh practice exams. This platform is awesome for practicing before taking the CKA. I bought additional passes to focus on my ability to tackle the questions within the time frame. These practice tests combined with the Udemy course’s practice tests were sufficient practice material for the CKA.

Between killer.sh, the Udemy course’s practice exams and example labs, and self-assigned “mini-tasks”, there’s more than enough material to become familiar with the Kubernetes ecosystem and feel comfortable with what the CKA syllabus lays out as required to pass the test.

Side Projects - Build, Deploy, Break and Learn

In addition to the courses described above, I ran a handful of experiments in my homelab. I found these helpful for solidifying core concepts as well as for trying out things not covered on the CKA.

Deploying Various Clusters

I originally deployed a kubeadm-bootstrapped 3-node cluster in my homelab. This gave me hands-on experience with leveraging kubeadm for setting up nodes as well as deploying a CNI. To make further deployments easier, I built basic Ansible roles for Ubuntu Server 20.04. After experimenting a bit, I eventually came across KubeVirt, an interesting solution that seeks to make it easy to manage virtual machines and containers via kubectl. I haven’t experimented with KubeVirt sufficiently to have an opinion on it. However, I am looking forward to seeing how the project evolves.

Next, I deployed k3s. Why have multiple types of clusters? K3s is by far an easier way to deploy a Kubernetes cluster. While k3s by default uses sqlite3 for a backend and not the standard etcd, this doesn’t matter much for my own experiments. However, in a production cluster with hundreds or thousands of objects, having a distributed key/value store may make more sense for in-memory object storage than constantly accessing the disk. Per the official documentation, k3s comes “batteries-included” by offering the following components out of the box:

- Ingress controller (traefik)

- Embedded service loadbalancer

- CNI via Flannel

- CoreDNS

- Containerd support

- …

In a kubeadm-bootstrapped environment, these components would have to be manually installed, and depending on your underlying hypervisor there may be more considerations for integration (thinking VSAN for storage, various networking constraints, etc.).

Within both clusters I would practice creating various Kubernetes objects and modifying them in place. The killer.sh exams give a great foundation of tasks to re-create on your own cluster. I won’t go into said tasks as they’re a paid service.

ARM Deployments - k3s/k0s

During my research of Kubernetes distributions, I came across k0s. K0s bundles all the aspects of the Kubernetes control plane into a single shippable binary (as does k3s). This makes it very easy to get up and running on both dev and production devices. While microk8s can also be installed on ARM platforms, I experimented with deploying k0s on Raspberry Pis I had lying around. The install guide is dead simple, and you can start experimenting with deploying on a single instance within thirty minutes.

K3s is also shipped as a single binary and supports ARM platforms. The notable differences between the k3s and k0s distributions are the components (ingress controller/load balancer) that come bundled out of the box, as well as the default CNI provider. At the time of writing, k0s leverages Calico whereas k3s uses Flannel.

From a conceptual standpoint, I think both k0s and k3s have a lot of practical use cases beyond a “traditional” bare metal deployment. A single binary running all of the control plane pieces can be very interesting for those in the IoT space. I have a side project I’m currently working on with k0s that I’m super excited about and will be reporting back on shortly, so I’ll save a deeper dive for another time.

Lens, seeing is believing

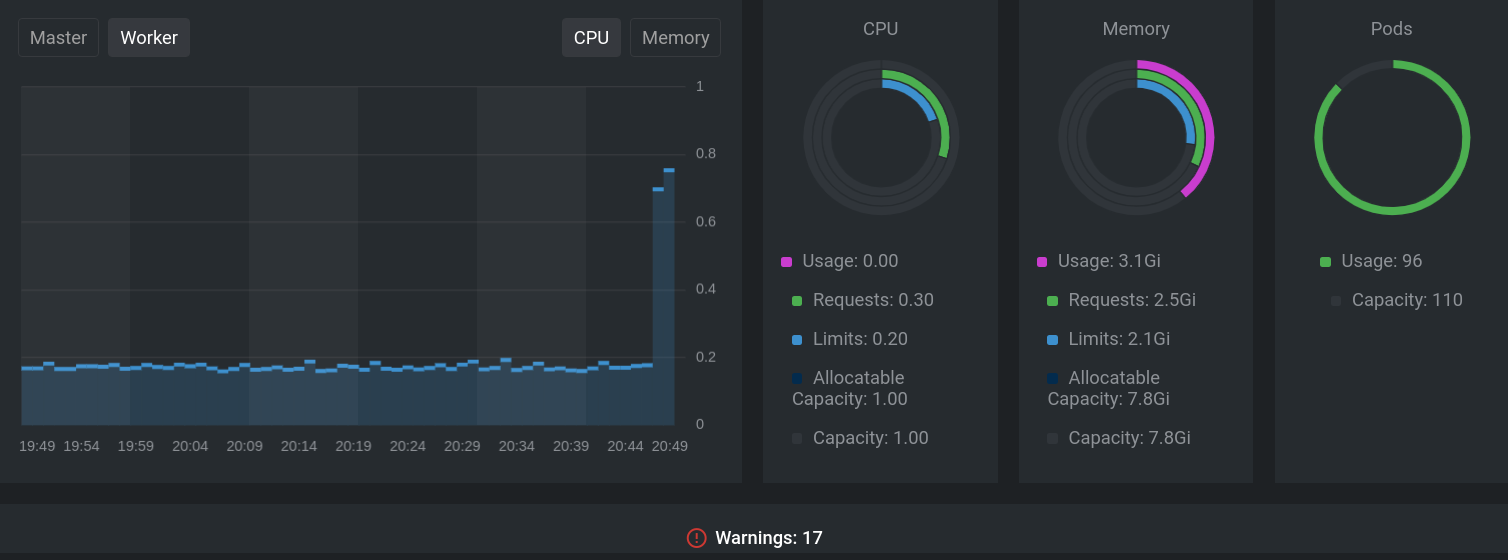

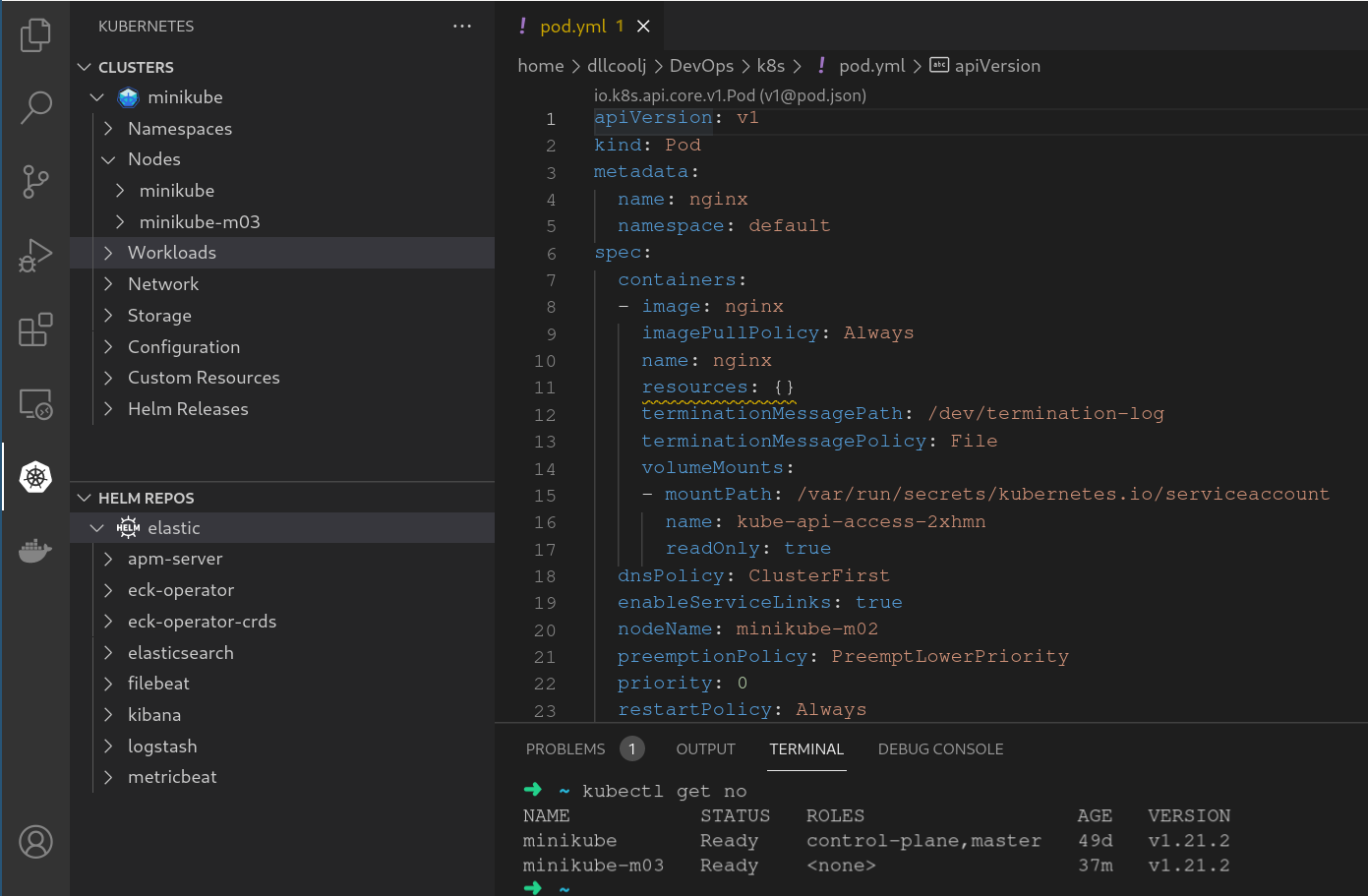

Knowing how to use kubectl efficiently is important to performing well on the CKA exam. That being said, it’s good to know what other solutions exist in industry for managing clusters day-to-day, from vendor web UIs to open source tools. If you haven’t experimented with Lens yet, you should put that on your todo list. Lens hooks into Helm registries to easily deploy charts. After deploying the prometheus chart, the Lens IDE will start receiving metrics from the deployed workloads. The screenshots in this section show a snapshot of the current status of my k3s cluster.

Visual Studio Code has plugins for managing Kubernetes environments. The YAML plugins can help rapidly create templates for deploying Kubernetes objects. However, you will not have these utilities when taking the CKA. These are great tools to be aware of for day-to-day tasks, but at the end of the day kubectl is key to administering an environment.

Beyond The Blog - Into The Kube

There’s far more to Kubernetes than the CKA could ever cover. Further experimenting with various cluster distributions, monitoring solutions, and service meshes is on my TODO list. At heart, I’m a big Linux nerd, and the Kubernetes project has enough complexity to keep one busy for quite some time. If you found this blog interesting, please share; if you found some discrepancy, please reach out! (@DLL_Cool_J).